Hacker News Personal Blogs

Thousands of aggregated blog posts for you to read!

More information

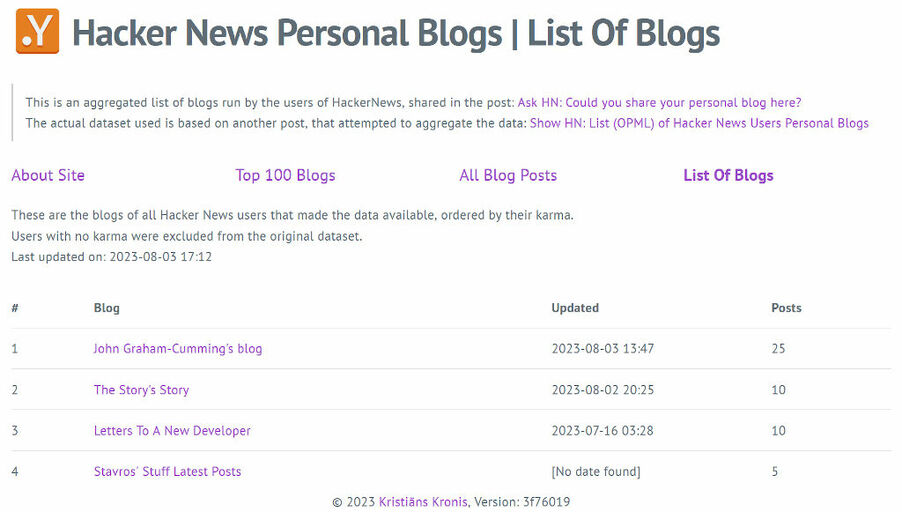

Turns out that you can do a lot with feeds like RSS, if you know where to source the data! Users of Hacker News, the popular site for technology enthusiasts, shared links to their personal blogs, and someone was kind enough to compile a list of the corresponding RSS/Atom feeds where possible. So of course I had to put that list to good use!

What I did

I thought it'd be nice to create a website that shows the most recent blog posts across those 1000+ sites, and also organizes the data by year or by how popular the blogs are - so that nobody's RSS feed reader would get overwhelmed by the sheer amount of data. After a day of focused work with Python, putting my web scraping knowledge to good use, I succeeded, and now there's both a site and a few combined feeds for you to have a look at!
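To give a rough idea of the "most recent" and "by year" views, here is a minimal sketch. The `Post` record and both function names are made up for illustration; the actual site's data model isn't described here, and ranking by popularity is left out because it depends on data this sketch doesn't have.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Post:
    # Hypothetical record shape, assumed for this sketch only.
    blog: str
    title: str
    url: str
    published: datetime


def newest_first(posts, limit=None):
    """Return posts sorted by publication date, most recent first."""
    ordered = sorted(posts, key=lambda p: p.published, reverse=True)
    return ordered if limit is None else ordered[:limit]


def group_by_year(posts):
    """Map each publication year to its posts, newest first within the year."""
    by_year = defaultdict(list)
    for post in newest_first(posts):
        by_year[post.published.year].append(post)
    return dict(by_year)
```

Capping the combined feed with `limit` is one way to keep a feed reader from being flooded, while the per-year groups can each become their own page.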

Not only that, but the data is regularly refreshed: the blogs are queried for their latest posts, and the results are statically rendered into HTML and XML files. This means that I can run the generation process whenever I want (currently a few times per day at set intervals), and anyone interacting with the site is served by the web server alone. There isn't even a need for me to put these thousands of records into a database or pay for hosting one. There is no overhead from PHP, Java or anything like that, and with a bit of GZIP compression, all of this is even more manageable!
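The static rendering step could look something like the sketch below. It is not the site's actual generator: `render_page` and `write_static` are hypothetical names, the markup is deliberately bare, and writing a pre-compressed `.gz` copy next to each file is only one way to get GZIP (the web server may just as well compress on the fly).

```python
import gzip
import html
from pathlib import Path


def render_page(title, posts):
    """Build a minimal HTML page from (name, url) pairs, escaping all text."""
    items = "\n".join(
        f'<li><a href="{html.escape(url, quote=True)}">{html.escape(name)}</a></li>'
        for name, url in posts
    )
    safe_title = html.escape(title)
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{safe_title}</title></head>\n'
        f"<body><h1>{safe_title}</h1>\n<ul>\n{items}\n</ul></body></html>\n"
    )


def write_static(path, content):
    """Write the page and a gzip-compressed copy; return the .gz path."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    data = content.encode("utf-8")
    path.write_bytes(data)
    gz_path = path.with_name(path.name + ".gz")
    gz_path.write_bytes(gzip.compress(data))
    return gz_path
```

Running something like this from a scheduled job leaves nothing dynamic at request time: the web server only has to hand out files that already exist on disk.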

What I learnt

Now, there were a few things I learnt in the process of creating this whole thing. One was to never trust the data that you receive - generating XML feeds failed for a while because someone's blog post from 2009 had a terminal control sequence in it, which is impossible to encode in XML properly. Not only that, but some of the blogs are unreachable: they return anything from errors to connections that just hang, as well as TLS/SSL certificate issues, so timeouts matter. In addition, Python is surprisingly memory hungry, so I needed to rent a new server to be able to process the whole dataset in one go, which doesn't speak too highly of the runtime, unfortunately. If you want to find out more, feel free to check out the blog post.
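For the curious, here is a small sketch of the two defensive measures mentioned above, using only the standard library. The function names and the 10 second timeout are assumptions for illustration, not what the site necessarily uses. The character class follows the `Char` production of XML 1.0, which excludes most control characters, including the ESC byte that starts terminal control sequences.

```python
import http.client
import re
import urllib.request
from xml.sax.saxutils import escape

# Matches every character that XML 1.0 does not allow in a document.
_INVALID_XML_CHARS = re.compile(
    r"[^\x09\x0A\x0D\x20-\uD7FF\uE000-\uFFFD\U00010000-\U0010FFFF]"
)


def xml_safe_text(text):
    """Drop characters XML 1.0 forbids, then escape &, < and >."""
    return escape(_INVALID_XML_CHARS.sub("", text))


def fetch_feed(url, timeout=10.0):
    """Return the raw feed bytes, or None if the blog cannot be fetched.

    The timeout applies to each blocking socket operation, so it is not
    a hard limit on the total duration of the request.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read()
    except (OSError, ValueError, http.client.HTTPException):
        # Covers DNS failures, refused connections, timeouts, TLS/SSL
        # certificate errors, HTTP error statuses and malformed URLs.
        return None
```

Returning `None` instead of raising lets one broken blog be skipped without stopping the whole run, though in practice it would be worth logging which feeds failed and why.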